Guide to Learning JavaScript

Understanding the Fundamentals of Layering

Layering is an organizational strategy for managing complexity in software systems: the system is divided into distinct components that interact only through defined boundaries. Each layer concentrates on a narrow set of responsibilities, which is what makes the design modular. Because of this compartmentalization, development teams can troubleshoot and enhance one area without causing ripple effects throughout the rest of the system, which helps maintain development velocity.

The modular nature of layered architectures offers two significant benefits: scalability and adaptability. When system requirements evolve, modifications typically remain confined to individual layers, which reduces the risk of regressions elsewhere. This structured approach has become a standard part of contemporary software engineering practice.

Defining the Key Components of a Layered System

Modern layered systems typically comprise multiple specialized tiers, each with clearly delineated responsibilities. Common layers include presentation (user interface), business logic (application rules), and data access (persistence mechanisms). The precise boundaries and interaction protocols between these layers often determine the system's long-term maintainability and performance characteristics.
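As a minimal sketch of the three layers named above, the following uses a hypothetical in-memory task store. Every name in it (`createTaskRepository`, `createTaskService`, `renderTaskList`) is an assumption made for illustration and does not come from any library.

```javascript
// Data access layer: the only code that knows how tasks are stored.
// Hypothetical in-memory store standing in for a real database.
function createTaskRepository() {
  const tasks = new Map();
  let nextId = 1;
  return {
    insert(title) {
      const task = { id: nextId++, title, done: false };
      tasks.set(task.id, task);
      return task;
    },
    findAll() {
      return [...tasks.values()];
    },
  };
}

// Business logic layer: applies application rules, delegates storage.
function createTaskService(repository) {
  return {
    addTask(title) {
      const trimmed = String(title).trim();
      if (trimmed === "") {
        throw new Error("Task title must not be empty");
      }
      return repository.insert(trimmed);
    },
    listOpenTasks() {
      return repository.findAll().filter((task) => !task.done);
    },
  };
}

// Presentation layer: turns domain objects into something displayable.
function renderTaskList(tasks) {
  return tasks.map((task) => `[ ] ${task.title}`).join("\n");
}

const service = createTaskService(createTaskRepository());
service.addTask("  Learn the DOM ");
service.addTask("Handle events");
console.log(renderTaskList(service.listOpenTasks()));
```

Because the service receives its repository as an argument, the storage mechanism could be swapped without touching the business rules or the rendering code.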

The Importance of Clear Communication Between Layers

Inter-layer communication forms the nervous system of any layered architecture. Well-defined interfaces and strict protocol adherence between layers act as guardrails, preventing error propagation that could compromise system stability. These communication contracts should be documented as rigorously as the layers themselves, with versioning strategies to accommodate future evolution.

Implementing Robust Data Handling Across Layers

Data integrity safeguards must permeate every architectural layer. Comprehensive validation routines, transformation pipelines, and error recovery mechanisms should be implemented at layer boundaries to maintain data fidelity throughout the system's lifecycle. This includes encryption standards for sensitive data, schema validation for structured information, and graceful degradation protocols for partial system failures.
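One way to picture validation at a layer boundary is a small parsing function that normalizes raw input and reports problems before anything reaches the business logic. This is only a sketch: the `parseSignupRequest` function and its `email` and `age` fields are hypothetical, and the email check is deliberately simplistic.

```javascript
// Converts a number or a string of digits to an integer, otherwise NaN.
function toInteger(value) {
  if (typeof value === "number") {
    return Number.isInteger(value) ? value : NaN;
  }
  if (typeof value === "string" && /^-?\d+$/.test(value.trim())) {
    return Number(value.trim());
  }
  return NaN;
}

// Hypothetical boundary check: validate and normalize raw input before
// it crosses from the presentation layer into the business logic layer.
function parseSignupRequest(raw) {
  const errors = [];
  const email =
    typeof raw?.email === "string" ? raw.email.trim().toLowerCase() : "";
  const age = toInteger(raw?.age);

  if (!email.includes("@")) {
    errors.push("email must contain '@'");
  }
  if (!Number.isInteger(age) || age < 0) {
    errors.push("age must be a non-negative integer");
  }

  // Return a result object instead of throwing, so the caller decides
  // how to recover (re-prompt the user, log, fall back to defaults).
  return errors.length > 0
    ? { ok: false, errors }
    : { ok: true, value: { email, age } };
}

console.log(parseSignupRequest({ email: " Ada@Example.com ", age: "36" }));
console.log(parseSignupRequest({ email: "nope", age: -1 }));
```

Returning a result object rather than throwing keeps the recovery decision with the calling layer, which fits the idea of graceful degradation at boundaries.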

Ensuring Scalability and Maintainability of Layered Systems

The true test of a layered architecture emerges during scaling events. Properly designed layers can scale horizontally (adding more instances) or vertically (enhancing existing instances) independently. This elasticity allows organizations to allocate resources precisely where needed, optimizing infrastructure costs while maintaining performance service-level agreements.

Addressing Security Concerns in a Multi-Layered Environment

Security in layered systems requires a defense-in-depth approach. Each layer should implement security controls appropriate to its risk profile, creating multiple barriers against potential breaches. This includes authentication/authorization at entry points, input sanitization in processing layers, and encryption for data at rest and in transit. Regular penetration testing should validate these protections against emerging threats.

Testing and Validation of Layered System Functionality

Testing strategies for layered systems should mirror the architecture itself. Unit tests verify individual layer functionality, integration tests validate inter-layer communication, and end-to-end tests confirm system-wide behavior. Automated testing pipelines should execute these tests continuously, with particular attention to failure scenarios at layer boundaries where issues often emerge.

DOM Manipulation: Interacting with the Web Page

Understanding the Document Object Model (DOM)

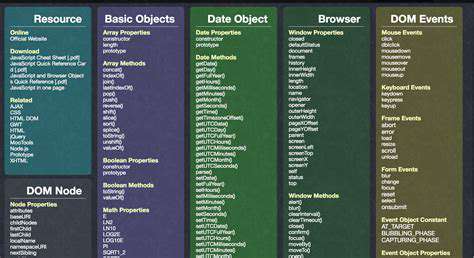

The Document Object Model (DOM) serves as the programmatic bridge between static markup and dynamic interactivity. By representing an HTML document as a tree of nodes, it lets JavaScript modify specific parts of a page without a full reload. This capability underpins modern web applications, turning static pages into responsive interfaces that react to user input in real time.

DOM operations follow predictable patterns: selection, inspection, and modification. Mastery of these patterns separates basic scripting from professional front-end development. The DOM API continues to evolve, with additions such as Shadow DOM expanding the encapsulation options for complex component architectures.

Selecting and Modifying Elements

Element selection forms the foundation of DOM manipulation. Modern JavaScript offers multiple selection strategies, from the traditional getElementById, which returns a single element, to the more versatile querySelectorAll, which accepts any CSS selector and returns a static list of matches. The choice of method has performance implications that become noticeable in complex applications.
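The two selection styles can be sketched as follows. The `.todo-item` selector, the `is-highlighted` class, and the `status` id are hypothetical names chosen for the example; the functions take the document or root element as a parameter so they are easy to exercise outside a page.

```javascript
// Marks every matching element inside `root` and returns how many matched.
// `root` is any Document or Element; the ".todo-item" selector and the
// "is-highlighted" class are hypothetical names for this sketch.
function highlightTodoItems(root) {
  const items = root.querySelectorAll(".todo-item");
  items.forEach((item) => {
    item.classList.add("is-highlighted");
  });
  return items.length;
}

// Updates the text of a single element looked up by id.
function setStatusText(doc, message) {
  const status = doc.getElementById("status");
  if (status === null) {
    return false; // nothing to update; the element is not on this page
  }
  status.textContent = message; // textContent does not parse HTML
  return true;
}

// Run only where a DOM exists (a browser), not in plain Node.js.
if (typeof document !== "undefined") {
  highlightTodoItems(document);
  setStatusText(document, "Ready");
}
```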

Beyond basic text manipulation, professional DOM work involves understanding reflow and repaint cycles. Efficient updates minimize browser rendering work, which is crucial for maintaining 60 fps animations. Techniques such as document fragments and virtual DOM implementations help batch changes so that the live page is updated as few times as possible.
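A minimal sketch of batching with a document fragment is shown below. The `appendItems` helper and the `#results` id are hypothetical; the DOM calls themselves (`createDocumentFragment`, `createElement`, `appendChild`) are standard.

```javascript
// Builds all list items off-document in a DocumentFragment, then attaches
// them with a single appendChild call so the live page is touched once.
// `doc` is a Document; `list` is a hypothetical <ul> element.
function appendItems(doc, list, labels) {
  const fragment = doc.createDocumentFragment();
  for (const label of labels) {
    const item = doc.createElement("li");
    item.textContent = label;
    fragment.appendChild(item);
  }
  list.appendChild(fragment);
  return labels.length;
}

// Run only where a DOM exists (a browser), not in plain Node.js.
if (typeof document !== "undefined") {
  const list = document.querySelector("#results"); // hypothetical id
  if (list !== null) {
    appendItems(document, list, ["First", "Second", "Third"]);
  }
}
```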

Working with Events and Attributes

Event handling transforms static pages into interactive experiences. The event lifecycle, with its capturing, target, and bubbling phases, provides multiple interception points for custom behavior. Modern practice favors event delegation, where a single listener on a common ancestor handles events for many children, over attaching individual handlers, especially for dynamic content.
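Event delegation can be sketched with one small helper. The `delegate` function, the `#todo-list` id, and the `button.remove` selector are assumptions for illustration; the sketch relies on the standard `closest` and `contains` methods and on the event bubbling up to the root.

```javascript
// Attaches ONE listener to `root` and routes events from any descendant
// matching `selector` to `handler`. Returns a function that removes it.
function delegate(root, type, selector, handler) {
  function listener(event) {
    const target = event.target;
    // Some targets (for example the document itself) have no closest().
    if (target === null || typeof target.closest !== "function") {
      return;
    }
    // closest() walks up from the event target to find a match.
    const match = target.closest(selector);
    if (match !== null && root.contains(match)) {
      handler(event, match);
    }
  }
  root.addEventListener(type, listener);
  return () => root.removeEventListener(type, listener);
}

// Run only where a DOM exists (a browser), not in plain Node.js.
if (typeof document !== "undefined") {
  const list = document.querySelector("#todo-list"); // hypothetical id
  if (list !== null) {
    delegate(list, "click", "button.remove", (event, button) => {
      button.closest("li")?.remove();
    });
  }
}
```

Items added to the list later are covered automatically, since the listener lives on the ancestor and not on each button.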

Custom data-* attributes have emerged as a powerful pattern for embedding information directly in markup. Read through the element's dataset property, they keep presentation and logic cleanly separated without resorting to non-standard attributes or hidden elements.
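As an illustration, the sketch below reads two hypothetical attributes, `data-product-id` and `data-price`, through the standard `dataset` property. The `readProduct` helper is an assumption made for the example.

```javascript
// Reads hypothetical data-product-id and data-price attributes from markup
// such as: <li data-product-id="42" data-price="9.99">Mug</li>
// dataset exposes data-product-id as the camelCase key productId,
// and every value arrives as a string.
function readProduct(element) {
  const { productId, price } = element.dataset;
  return {
    id: productId ?? null,
    price: price === undefined ? null : Number.parseFloat(price),
  };
}

// Run only where a DOM exists (a browser), not in plain Node.js.
if (typeof document !== "undefined") {
  const item = document.querySelector("[data-product-id]");
  if (item !== null) {
    console.log(readProduct(item));
  }
}
```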

Event Handling: Responding to User Interactions

Event Handling Fundamentals

Event-driven programming represents a paradigm shift from linear execution flows, instead structuring application behavior around user actions and system notifications. This model aligns perfectly with graphical interfaces, where unpredictable user input drives application state changes.

Effective event handling requires understanding the complete event lifecycle, from the initial trigger through propagation to final cleanup. Modern frameworks abstract much of this complexity, but the underlying principles remain essential for debugging edge cases.

Types of Events and Their Significance

The browser event ecosystem includes dozens of specialized event types, from basic clicks to sophisticated gesture recognition. Input events in particular require careful handling to accommodate diverse devices and accessibility needs.

Custom events enable sophisticated component communication patterns. When combined with proper typing and payload definitions, they create clean interfaces between application modules while maintaining loose coupling.
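A loosely coupled publisher and subscriber can be sketched with the standard `EventTarget` and `Event` classes. The `cart:updated` event name and the `CartUpdatedEvent` class are hypothetical; in browsers, the built-in `CustomEvent` with its `detail` property is a common alternative to subclassing.

```javascript
// A hypothetical "cart:updated" event with a defined payload shape.
// Subclassing Event works on any EventTarget; in browsers the built-in
// CustomEvent with its `detail` property is the common alternative.
class CartUpdatedEvent extends Event {
  constructor(itemCount) {
    super("cart:updated");
    this.itemCount = itemCount;
  }
}

// The cart publishes events and knows nothing about who listens.
const cart = new EventTarget();

// A separate module (here, a badge) subscribes without touching cart logic.
let badgeText = "";
cart.addEventListener("cart:updated", (event) => {
  badgeText = `${event.itemCount} item(s)`;
});

cart.dispatchEvent(new CartUpdatedEvent(3));
console.log(badgeText);
```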

Implementing Event Handlers Effectively

Professional event handling balances several concerns: performance (through delegation), memory management (proper listener cleanup), and accessibility (keyboard support). The rise of passive event listeners reflects ongoing optimization efforts for scroll performance.
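Listener cleanup and passive listeners can be combined in one registration, as in the sketch below. The `trackScroll` helper is hypothetical; the `passive` and `signal` options of `addEventListener` and the `AbortController` class are standard. The demonstration uses a plain `EventTarget` so it runs outside a page.

```javascript
// Registers a scroll listener tied to an AbortController so that calling
// the returned function removes it. `target` is any EventTarget.
function trackScroll(target, onScroll) {
  const controller = new AbortController();
  target.addEventListener("scroll", onScroll, {
    passive: true, // promises not to call preventDefault(), so scrolling is not blocked
    signal: controller.signal, // the listener is removed when the signal aborts
  });
  return () => controller.abort();
}

// Demonstration on a plain EventTarget; in a page, pass window or an element.
const target = new EventTarget();
let calls = 0;
const stop = trackScroll(target, () => {
  calls += 1;
});

target.dispatchEvent(new Event("scroll")); // handled
stop();
target.dispatchEvent(new Event("scroll")); // ignored: listener was removed
console.log(calls);
```

Passing the same signal to several `addEventListener` calls would let a single `abort()` remove all of them, which is convenient when a component is torn down.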

Error boundaries around event handlers prevent isolated failures from crashing entire applications. Logging systems should capture sufficient context to reproduce issues while respecting user privacy constraints.
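One possible shape for such a guard is a wrapper that catches errors and forwards a small, privacy-conscious record to a reporting callback. The `guarded` function and the `report` callback are hypothetical names for this sketch, not part of any framework.

```javascript
// Wraps a handler so a thrown error is reported instead of propagating.
// `report` is a hypothetical logging callback supplied by the application.
function guarded(handlerName, handler, report) {
  return function guardedHandler(event) {
    try {
      return handler(event);
    } catch (error) {
      // Log only what is needed to reproduce: handler name, event type,
      // and the error message. Avoid logging user-entered values.
      report({
        handler: handlerName,
        eventType: event?.type ?? "unknown",
        message: error instanceof Error ? error.message : String(error),
      });
      return undefined;
    }
  };
}

const reports = [];
const onSave = guarded(
  "onSave",
  () => {
    throw new Error("network unavailable");
  },
  (entry) => reports.push(entry)
);

onSave({ type: "click" }); // does not throw
console.log(reports);
```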
